TL;DR Version: Windows 10 Express Updates in ConfigMgr are better than they used to be, confusing as heck sometimes, don’t always work as advertised, but may just finally be usable! This is a test of functionality in CM1802 – using BranchCache, Peer Cache and Delivery Optimization.

Here Again?

There’s been a lot of chatter recently in the Twittersphere and elsewhere, regarding Windows servicing in general, and some of the issues that can arise. One of those issues is of course the problem of shifting those large Cumulative Updates around the network, both from Primary to DP (intra-site traffic) and from DP to client.

We are of course massive fans of anything that can reduce WAN or LAN usage – think DeDupe, Peer-to-Peer etc. – but today I’m going to give you the rundown on another weapon in the ‘Arsenal of WaaS’ – Express Updates.

You’re probably aware that Express Updates are a kind of ‘Delta Update’ technology, designed to reduce the payload to the client as far as possible by only delivering the exact bits n bytes that the client needs to reach a certain level of Updated-ness.

What I’ve done here is to get into the nitty-gritty of how these updates are delivered, how you can improve performance even more by using Peer-To-Peer tech, some pitfalls and tips that will hopefully help you decide if this tech is one that you want to use.

There’s even a couple of scripts to help you to monitor the results. Dang we’re good to you!

Size Isn’t Everything. Oh really?

Now the elephant in the room for some folks is the sheer size of the Express Updates. That is, the size of the content that needs to be downloaded to your Primary/CAS and then shunted out to your Distribution Points. That has been covered elsewhere so I’m not even going to go there – if you want to harvest the benefits of Express at the business end (clients) then you’ll just have to get over it and pay yer dues on the server end of things.

Technologies in Play

For this test, I enabled ConfigMgr Peer Cache, BranchCache, and Windows Delivery Optimization (not optional) – just to show which Peer-to-Peer and caching technologies could help to further enhance Express Update efficiencies.

I threw together a couple of scripts in order to parse the BITS event log for BranchCache/Peer Cache results, and a simple Delivery Optimization Monitor to see what DO had to do with it all. You can download them and have a play yourself.

The Test

For this test I selected the 2018-04 Cumulative Update (x64) (KB4093112), which came in at a hefty 5.12 GB of WSUS/DP content.

This includes the full (non-Express) CU, which is there in case a system can’t perform an Express installation (it happens).

The non-Express version of the CU in this case is 738 MB.

How it Works

Express Updates for Windows 10 with ConfigMgr work in a slightly convoluted way. Basically, Delivery Optimization is the requesting application, and that request is then handled by BITS via a local proxy which listens on port 8005. So, because BITS is used, you’re looking at potentially utilizing BranchCache and Peer Cache. What isn’t widely known, however, is that Delivery Optimization is also capable of pulling content from peers at the same time – so you need to make sure that DO is configured correctly too. Otherwise you could be unwittingly pulling content from peers far, far away across a distant WAN.

Deep Dive!

The update itself consists of a .psf file (4.38 GB), which is where all the binaries are stored, and a .cab file (19.4 MB).

So when the Express install starts, the ConfigMgr client first downloads a stub (the .cab file), which is part of the Express package.

This stub is then passed to the Windows installer, which uses the stub to do a local inventory, comparing the deltas of the files on the device with what is needed to get to the latest version of the file being offered in the update. You might see TIWorker.exe getting jiggy at this point as it scans ALL the binaries affected by the update. It was using about 20-30% CPU at times on my test machines.

The Windows installer then requests the client to download the ranges which have been determined to be required. This is the important part – it’s only downloading byte ranges instead of each full binary.
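To make the "byte ranges instead of full binaries" idea a bit more concrete, here's a rough Python sketch of how a list of required ranges can be tidied up and written as a standard HTTP Range header value. It's purely illustrative: the function names and the sample ranges are made up, and it is not a claim about how the ConfigMgr client or BITS builds its requests internally.

```python
from typing import List, Tuple

# An inclusive (first_byte, last_byte) pair, as used by HTTP range requests.
ByteRange = Tuple[int, int]


def coalesce(ranges: List[ByteRange]) -> List[ByteRange]:
    """Merge overlapping or directly adjacent inclusive byte ranges."""
    merged: List[ByteRange] = []
    for first, last in sorted(ranges):
        if merged and first <= merged[-1][1] + 1:
            prev_first, prev_last = merged[-1]
            merged[-1] = (prev_first, max(prev_last, last))
        else:
            merged.append((first, last))
    return merged


def range_header(ranges: List[ByteRange]) -> str:
    """Format the ranges as the value of an HTTP Range request header."""
    parts = ",".join(f"{first}-{last}" for first, last in coalesce(ranges))
    return f"bytes={parts}"


def bytes_requested(ranges: List[ByteRange]) -> int:
    """Total payload the ranges cover, counting each byte once."""
    return sum(last - first + 1 for first, last in coalesce(ranges))


# Hypothetical ranges picked out of a multi-GB .psf file.
needed = [(0, 99), (100, 199), (500, 599)]
print(range_header(needed))     # header value for the request
print(bytes_requested(needed))  # bytes actually pulled over the wire
```

The point is simply that the client asks for a few hundred bytes here and there rather than the whole 4.38 GB file.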

You can see this activity in the DeltaDownload.log on the client (below).

The client downloads these ranges and passes them to the Windows installer, which applies the ranges and then determines if additional ranges are needed. This repeats until the Windows installer tells the client that all necessary ranges have been downloaded.

As BITS is doing the heavy lifting here, we can look at the BITS job (Using BITSAdmin.exe) and see that it is batching a number of ranges into a single job. (below)

Note: This is a big improvement over previous iterations of the way that ConfigMgr handled these ranges. In previous releases each byte range was packaged into a single BITS job, which was both resource intensive and slow. (Each BITS job has to be created, monitored and then completed, and each one initiates an http(s) connection to the DP.) In my previous testing there were well in excess of 1200 BITS jobs for a CU and it took anything up to 4 hours to download and complete. Not cool.

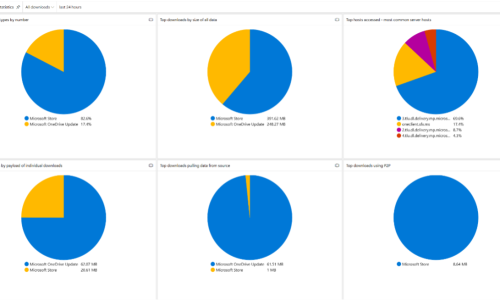
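Here's a tiny Python sketch of why batching matters, comparing one job per range with several ranges per job. The batch size of 50 and the generated ranges are assumptions for illustration only; I don't know the actual batching rule that the client uses.

```python
from typing import Iterator, List, Sequence, Tuple

ByteRange = Tuple[int, int]


def batch_ranges(ranges: Sequence[ByteRange], per_job: int) -> Iterator[List[ByteRange]]:
    """Yield consecutive groups of at most per_job ranges, one group per job."""
    if per_job < 1:
        raise ValueError("per_job must be at least 1")
    for start in range(0, len(ranges), per_job):
        yield list(ranges[start:start + per_job])


# 1200 made-up ranges, roughly the job count seen with the old behaviour.
ranges = [(i * 4096, i * 4096 + 1023) for i in range(1200)]

old_style = list(batch_ranges(ranges, 1))   # one BITS job per range
batched = list(batch_ranges(ranges, 50))    # hypothetical batch size

print(len(old_style), "jobs vs", len(batched), "jobs")
```

Every job carries its own create, monitor and complete overhead, so fewer jobs for the same set of ranges means less faffing about.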

The Results Are In

The first machine in the test had the following results. These are the results from parsing the BITS event log with PowerShell to time and monitor P2P activity.

Initial Stub Download

Job Running for 0 Hours 0 Minutes 17 Secs

MegaBytes Transferred 19.46 MB

MegaBytes From Peers 0.00 MB

Percent From Peers 0.00%

Download Speed 9.2071 Mbits/S

Obviously, this is all coming from the DP as there are no local peers with the content yet.

Then, there is a pause of approx. 6 minutes. This is while the local machine is being scanned to see exactly which byte ranges need to be downloaded. Bear in mind that this test was performed on a VM so physical hardware may well perform better.

Once the local scan has been performed – the appropriate byte ranges are bundled into BITS jobs and downloaded.

Final Score

Job Running for 1 Hours 2 Minutes 7 Secs

MegaBytes Transferred 239.27 MB

MegaBytes From Peers 0.20 MB

MegaBytes From Source 239.07 MB

Percent From Peers 0.08%

Download Speed 0.4911 Mbits/S

Number of BITS Jobs 612

Wait – Bytes from Peers? Yes, there was a tiny amount of P2P magic, but in reality that’s BranchCache at work, and it found some duplicate blocks of data in the cache that it was able to use twice and avoid re-downloading. We love BranchCache when it does that.

As you can see, at just 239 MB the data downloaded is a massive saving over the full 738 MB download. And the download took 1 hour, which isn’t too bad. So even without ANY Peer-to-Peer in play - there's a saving to be made here. But it gets better with BranchCache (doesn't everything?)
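If you want to check the sums yourself, here's a minimal Python sketch of the arithmetic behind the "Percent From Peers" line and the saving against the full CU. The JobRecord class and its field names are hypothetical stand-ins for whatever you pull out of the BITS event log; this is not the script used for the test.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class JobRecord:
    """One completed transfer. Field names are hypothetical."""
    transferred_mb: float
    from_peers_mb: float


def summarize(jobs: Iterable[JobRecord]) -> Dict[str, float]:
    """Add up the per-job figures and work out the peer percentage."""
    jobs = list(jobs)
    total = sum(job.transferred_mb for job in jobs)
    peers = sum(job.from_peers_mb for job in jobs)
    percent = 100 * peers / total if total else 0.0
    return {
        "transferred_mb": round(total, 2),
        "from_peers_mb": round(peers, 2),
        "from_source_mb": round(total - peers, 2),
        "percent_from_peers": round(percent, 2),
    }


def saving_vs_full(downloaded_mb: float, full_mb: float) -> float:
    """Percentage saved compared with downloading the full update."""
    return round(100 * (1 - downloaded_mb / full_mb), 1)


# Two made-up records that add up to the first machine's totals above.
first_machine = summarize([JobRecord(239.07, 0.0), JobRecord(0.20, 0.20)])
print(first_machine)
print(saving_vs_full(239.27, 738.0), "% saved vs the full CU")
```

Feeding in the first machine's totals gives the same 0.08% from peers, and works out at roughly a two-thirds saving over the 738 MB full update.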

Subsequent downloads

Once that first machine has cached the download, obviously you get even more savings by utilizing Microsoft Peer-To-Peer tech. Let's see how that goes.

The first (stub file) download is pretty quick – and grabs 95% of content via Peer-to-Peer from the first machine.

Job Running for 0 Hours 0 Minutes 9 Secs

MegaBytes Transferred 19.46 MB

MegaBytes From Peers 18.46 MB

Percent From Peers 94.86%

Download Speed 16.8985 Mbits/S

Number of BITS Jobs 2

So, on to the main course. My wee PowerShell script recorded the following.

Job Running for 0 Hours 50 Minutes 4 Secs

MegaBytes Transferred 240.96 MB

MegaBytes From Peers 172.04 MB

MegaBytes From Source 68.92 MB

Percent From Peers 71.40%

Download Speed 0.6008 Mbits/S

Number of BITS Jobs 539

The script also records the URLs from which content is downloaded. Note here that ConfigMgr Peer Cache comes into play:

536 http://xxxxxxxxx.2pint.local:80/SMS_DP_SMSPKG$/Express_355e0e08-2c4b-416d-88fd-78559798c100/sccm?/windows10.0-kb4093112-x64.psf

1 https://xxxxxxxxx.2pint.local:8003/SCCM_BranchCache$/express_355e0e08-2c4b-416d-88fd-78559798c100/sccm?/windows10.0-kb4093112-x64.psf

1 http://2PSCM01.2pint.local:80/SMS_DP_SMSPKG$/Express_355e0e08-2c4b-416d-88fd-78559798c100/sccm?/windows10.0-kb4093112-x64-express.cab

1 https://xxxxxxx.2pint.local:8003/SCCM_BranchCache$/express_355e0e08-2c4b-416d-88fd-78559798c100/sccm?/windows10.0-kb4093112-x64-express.cab
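If you fancy tallying a list like that yourself, here's a rough Python sketch that sorts each URL into "Distribution Point" or "Peer Cache". It assumes, based on the output above, that port 8003 or an SCCM_BranchCache$ path means a Peer Cache source and that an SMS_DP_SMSPKG$ path means a DP. The hostnames are placeholders, not real servers.

```python
from collections import Counter
from typing import Iterable
from urllib.parse import urlsplit


def classify(url: str) -> str:
    """Guess the content source from the URL (assumed mapping, see above)."""
    parts = urlsplit(url)
    path = parts.path.lower()
    if parts.port == 8003 or "/sccm_branchcache$/" in path:
        return "peer-cache"
    if "/sms_dp_smspkg$/" in path:
        return "distribution-point"
    return "unknown"


def tally(lines: Iterable[str]) -> Counter:
    """Sum the leading job counts per source for '<count> <url>' lines."""
    counts: Counter = Counter()
    for line in lines:
        count, url = line.split(maxsplit=1)
        counts[classify(url.strip())] += int(count)
    return counts


# Same shape as the output above, with placeholder hostnames.
SAMPLE = [
    "536 http://dp01.example.local:80/SMS_DP_SMSPKG$/Express_355e0e08-2c4b-416d-88fd-78559798c100/sccm?/windows10.0-kb4093112-x64.psf",
    "1 https://peer01.example.local:8003/SCCM_BranchCache$/express_355e0e08-2c4b-416d-88fd-78559798c100/sccm?/windows10.0-kb4093112-x64.psf",
    "1 http://dp01.example.local:80/SMS_DP_SMSPKG$/Express_355e0e08-2c4b-416d-88fd-78559798c100/sccm?/windows10.0-kb4093112-x64-express.cab",
    "1 https://peer01.example.local:8003/SCCM_BranchCache$/express_355e0e08-2c4b-416d-88fd-78559798c100/sccm?/windows10.0-kb4093112-x64-express.cab",
]

print(tally(SAMPLE))
```

A count like this only tells you where each job was pointed, not how many bytes each source actually delivered.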

Sadly, if you look into this a little deeper, you notice that Peer Cache only gets 1 MB and then fails (this happened on every single machine that I tested). It seems that Peer Cache is unable to service byte-range requests. In the Event log this is evidenced by Event 202 – “The web server or proxy server does not support an HTTP feature required by BITS”. On the Peer Cache source machine we see the following in the CAS.log: HttpSendResponseEntityBody failed with error 64.

So at that point it falls back to the Distribution Point, but fortunately BranchCache takes up the slack for the remainder of the downloads.

So you can see that the download from source is just 68.92 MB in total. Quite a saving now!

If you extrapolate that over just 10 machines the result is pretty nice:

Results across 10 Systems

1 x 239.27 (Initial Download)

9 x 68.92 = 620.28MB

Total: 859.55MB

So, an average of about 86 MB per client for a CU of 738 MB? Not too shabby!
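The same extrapolation as a few lines of Python, in case you want to plug in your own numbers. It assumes (as the sums above do) that every machine after the first pulls the same amount from source as my second test machine did, which is a simplification.

```python
from typing import Tuple


def extrapolate(first_mb: float, later_mb: float, machines: int) -> Tuple[float, float]:
    """Return (total MB from source, average MB per client)."""
    if machines < 1:
        raise ValueError("need at least one machine")
    total = first_mb + (machines - 1) * later_mb
    return round(total, 2), round(total / machines, 1)


total_mb, average_mb = extrapolate(239.27, 68.92, 10)
print(f"{total_mb} MB in total, about {average_mb} MB per client")
```

With 239.27 MB for the first machine and 68.92 MB for each of the other nine, this gives the same 859.55 MB total. The more machines you add, the closer the average creeps towards that 68.92 MB figure.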

What about DO?

So what about Delivery Optimization? Well, interestingly, DO can come into play – and I did see this during testing. Once the content is downloaded – it goes into the DO Cache (even though it was downloaded via BITS) and becomes available to other peers.

Once that happens, you can have a download where content is coming via both BranchCache Peer-to-Peer AND from Delivery Optimization Peers. This is important because you need to configure DO to make sure that it isn’t pulling that Peer content from some far-off galaxy via your inter-galactic WAN links.

We do that DO Config Automatically in our StifleR product – or as of 1802 you can use the Delivery Optimization Client Settings (below) to configure Group Mode (providing that your boundary groups are in order), but whatever you do, don’t ignore it!
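As a quick reference (and not a picture of the client settings themselves), here's a small Python lookup of the Delivery Optimization download modes as I understand the documented DODownloadMode policy values. Treat the numbers as an assumption and check them against the Microsoft documentation for your Windows build; the helper names are made up.

```python
# DODownloadMode values as commonly documented (assumption: verify for your build).
DOWNLOAD_MODES = {
    0: "HTTP only",
    1: "LAN",
    2: "Group",
    3: "Internet",
    99: "Simple",
    100: "Bypass",
}

# Modes in which DO will fetch content from other peers.
PEERING_MODES = {1, 2, 3}


def describe(mode: int) -> str:
    """Human-readable name for a download mode value."""
    return DOWNLOAD_MODES.get(mode, "Unknown")


def uses_peers(mode: int) -> bool:
    """True if this mode lets DO pull content from peers."""
    return mode in PEERING_MODES


def is_group_mode(mode: int) -> bool:
    """True for Group mode, the setting discussed above."""
    return mode == 2


for value in sorted(DOWNLOAD_MODES):
    print(value, describe(value), "peers" if uses_peers(value) else "no peers")
```

If a client reports anything other than what you intended, that's your cue to go and look at your DO configuration before the WAN team comes looking for you.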

Local Data Storage

With all of this content being shunted around, what is the actual impact on the client-side as far as storage goes?

Well – During the download there is a lot of temporary shenanigans while things are pieced together. Data ends up in the BranchCache cache, CCMCache folder, AND Delivery Optimization Cache. Overall though, the free space on my test systems only reduced by 3.2 GB which, given the original payload, isn’t too bad. This isn’t necessarily a true figure either, as Express Update files on the clients are the same as they are on the server – but only certain portions of the files are actually populated, so the actual size on disk is smaller. Here's the temporary folder in action in the CCMCache

And here's the CCMCache - note the Size on Disk

Summary – Tips – Tricks

Peer it! No excuses for not using Microsoft Peer-to-Peer tech with Express Updates as it can use any or all – without any effort from you! All you need to do is to make sure that you have BranchCache, Delivery Optimization and/or Peer Cache set up correctly.

As with everything new – we recommend that you test test and test again. You can download the test scripts that I threw together for this blog here – then you can do your own timings on your own systems.

It didn’t work every time though..

In some instances, the Express Update is not used, and it reverts back to using the full CU update (738 MB). Not sure what was controlling this behaviour but it seemed that the DeltaDownloader component timed out… more testing needed there – any volunteers?

Track your Express Updates in Real Time with 2Pint StifleR - We monitor Express Updates in our real-time dashboards - download a trial copy now or request a demo.

Finally – you don’t want to use Express Updates any more? Good luck with turning them off!

Lots of folks have been reporting that Express Updates are proving ‘really really’ hard to turn off, although this is supposed to be fixed now. So here are some steps to try if you’re having that issue. I grabbed these from the Comments in the ConfigMgr docs – before they were nuked in the switch to the new feedback system! Thanks to whoever posted them …apparently these are the steps recommended by Microsoft PSS but as ever – use at your own risk and test test test!

There were two action plans suggested by the engineer to be completed in order.

Action plan 1.

- Disable the express updates.

- Run updates synchronization task in SCCM console.

- The express updates would be shown grayed out after running summarization.

- Delete those updates from existing update groups and packages.

- Update all distribution points with the respective packages.

- Run ContentLibraryCleanup tool (taken from the SCCM SDK) against all distribution points (Script it and run it. But it works only after a successful replication of the package on the respective distribution point.)

Action plan 2:

Clean up the WSUSContent folder, then run WSUSUtil.exe RESET, which will re-download the content for all approved updates.

Steps to be followed:

- Disapprove any unneeded updates.

- Close any open WSUS consoles.

- Go to Administrative Tools – Services and STOP the Update Services service.

- In Windows Explorer browse to the WSUSContent folder (typically D:\WSUS\WSUSContent or C:\WSUS\WSUSContent)

- Delete ALL the files and folders in the WSUSContent folder.

- Go to Administrative Tools – Services and START the Update Services service.

- Open a command prompt and navigate to the folder: C:\Program Files\Update Services\Tools.

- Run the command WSUSUtil.exe RESET. This command tells WSUS to check each update in the database, and verify that the content is present in the WSUSContent folder. As it finds that the content is not present in the folder, it executes a BITS job to download the content from Microsoft. This process takes quite a bit of time and runs in the background.

Happy Express Updating!

Phil 2Pint

Doris The Bot..