We spend a lot of time at 2Pint working with networking. As our home page currently indicates, that is "no matter the network condition, infrastructure, cloud, or vendor." Nothing beats real-world experience with this stuff, but it's still useful to be able to simulate something that is at least close.

So what sorts of things do we want to simulate? A few examples:

- Bandwidth (a.k.a. throughput). How fast can the network medium move bits?

- Round-trip time. Regardless of the network speed, sending data between two devices takes time, based primarily on the physical media and distance between devices.

- Reliability. Packet loss and other network transmission errors will at least slow the whole process down, but depending on the protocol it could cause outright failures.

On a local network, bandwidth is high, round-trip time is low, and reliability is great. So what we really want to simulate are WAN links, which means traffic being routed between different networks. Hence we need a router that can manipulate the traffic so that it more closely resembles a WAN link.

I initially tried working with pfSense in a Hyper-V VM, routing and shaping traffic between two Hyper-V networks. But no matter how hard I tried, pfSense had performance issues on Hyper-V. There are lots of suggestions on working around these (e.g. going back to older versions, changing NIC settings, etc.) but these weren't working reliably for me.

So onto plan B: OPNsense. It's a fork of pfSense created in 2014, so it has diverged somewhat, but it has many of the same capabilities. Both use dummynet (via ipfw) for traffic shaping, and they are very similar when it comes to configuration.

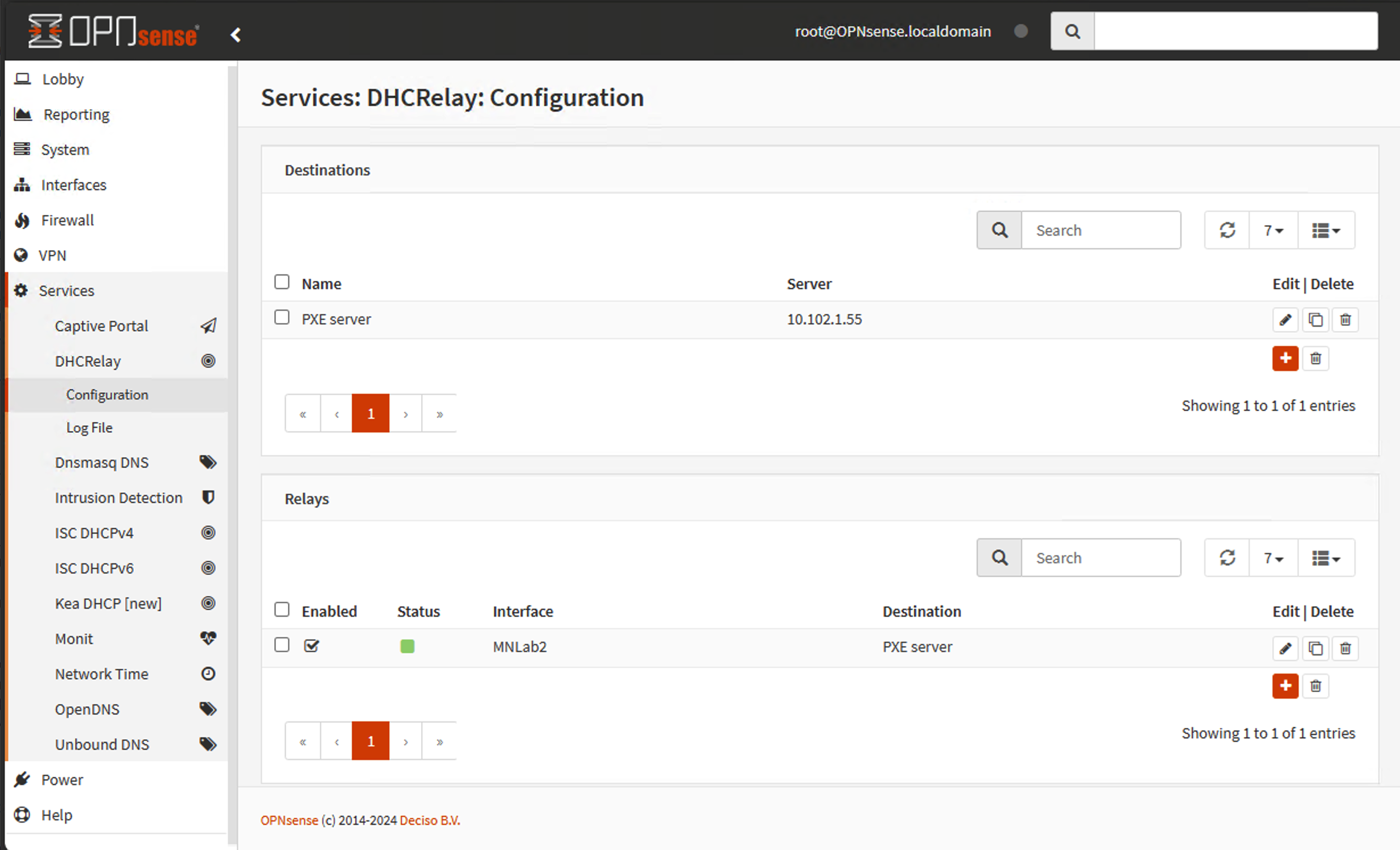

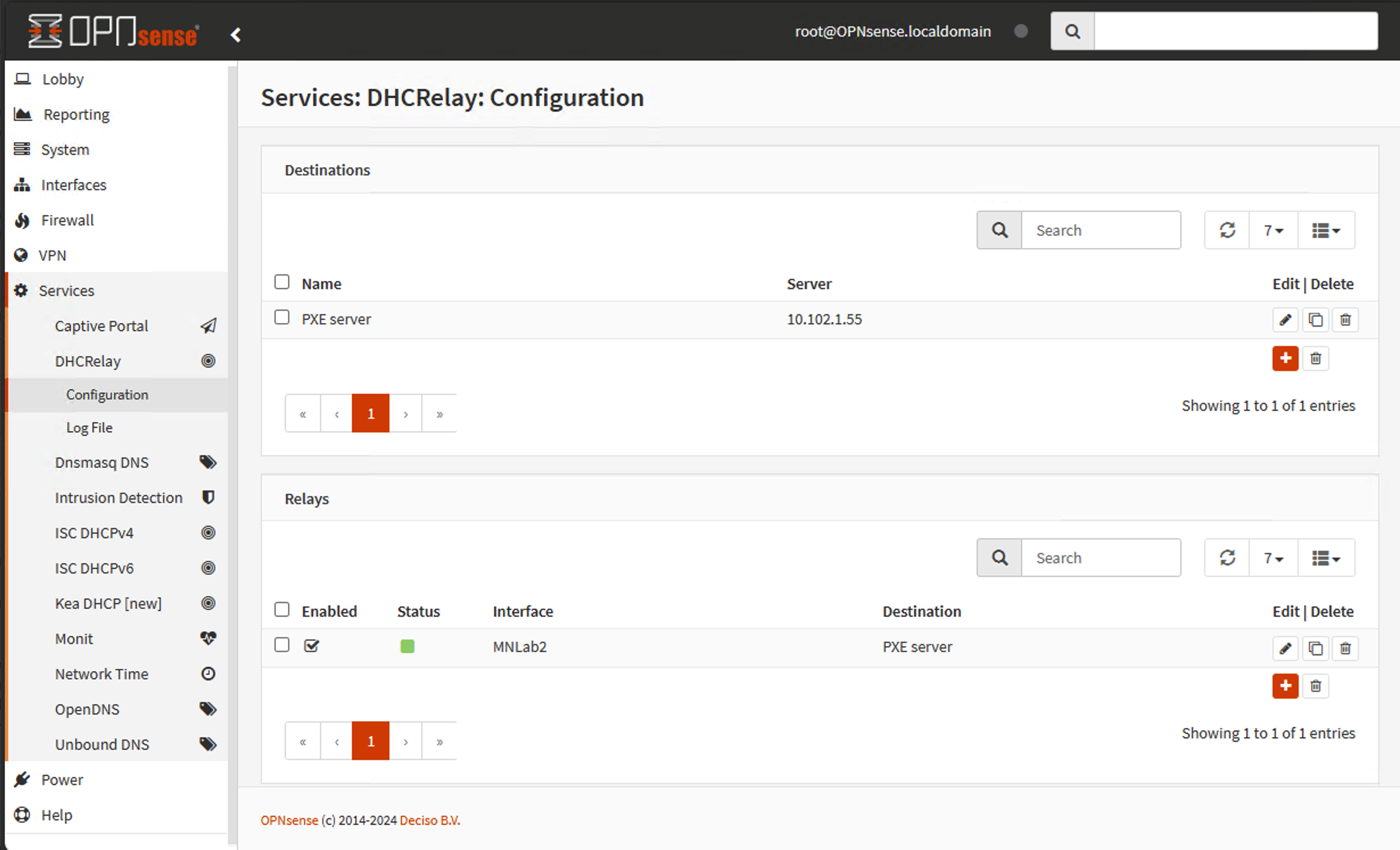

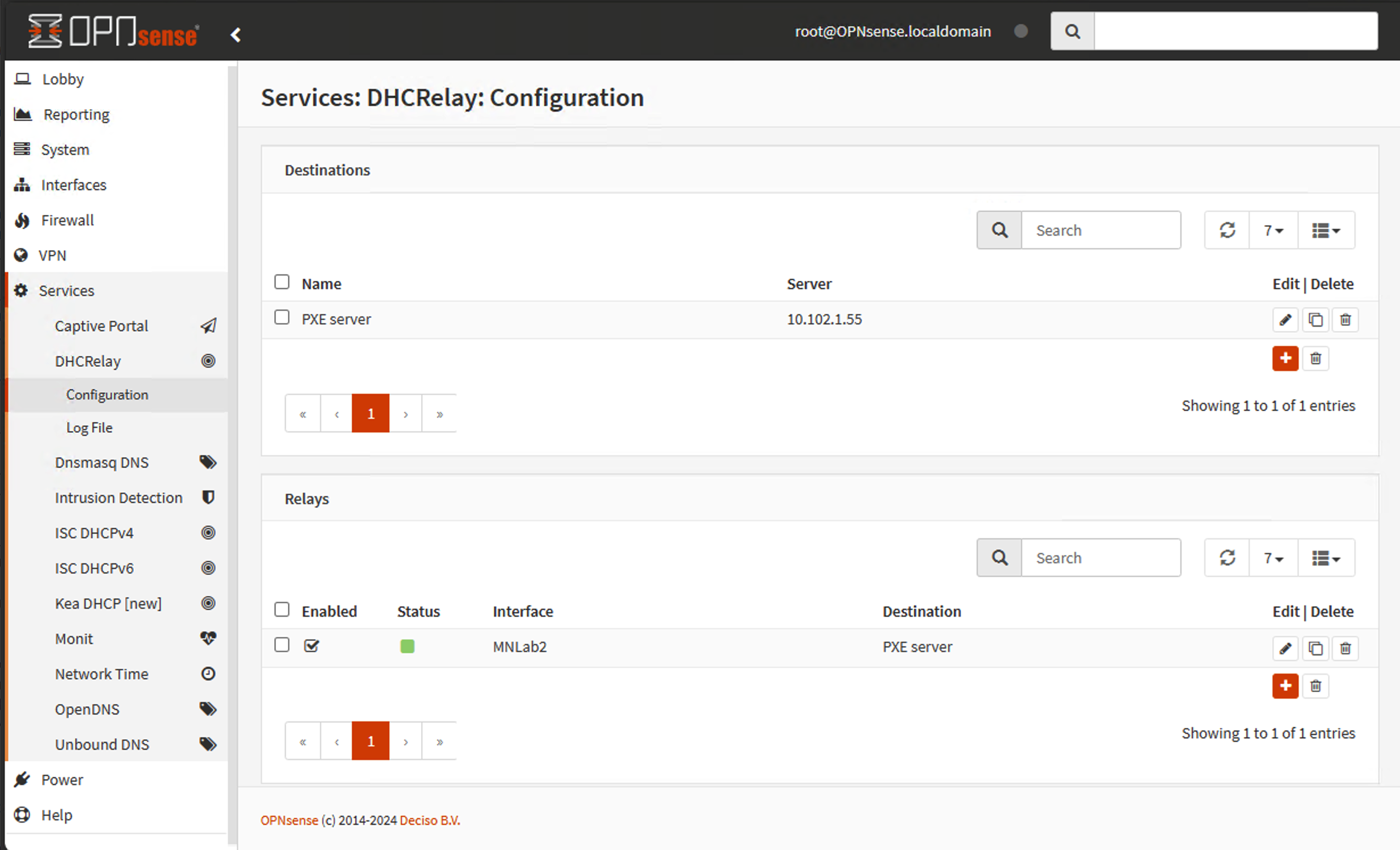

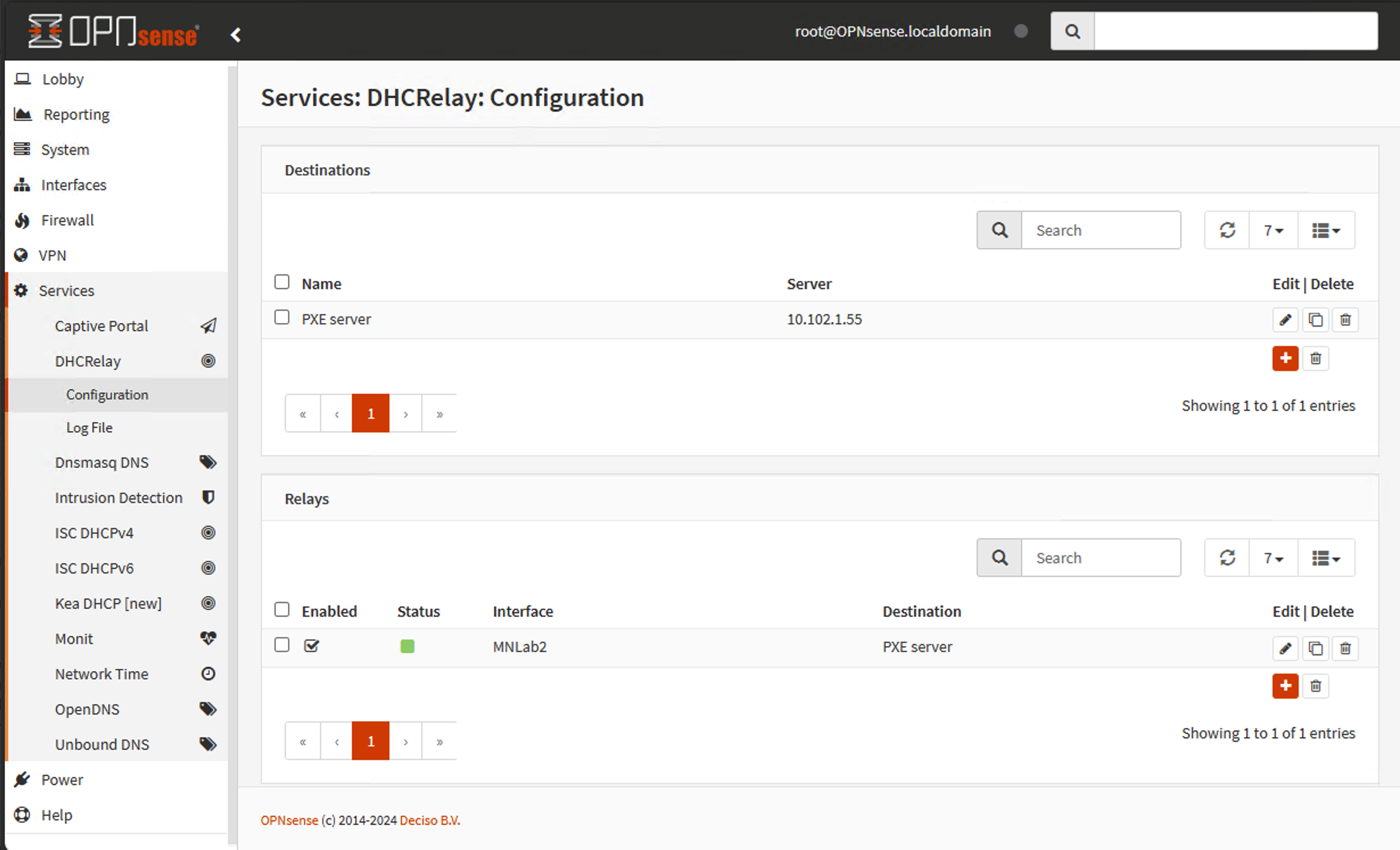

First, you need to have two networks in Hyper-V, and have OPNsense configured to route between them. In my case, I found it helpful to create a DHCP relay between the two so that my existing DHCP and PXE servers on network #1 (10.102.1.0/24) could also service network #2 (10.102.2.0/24) without much work.

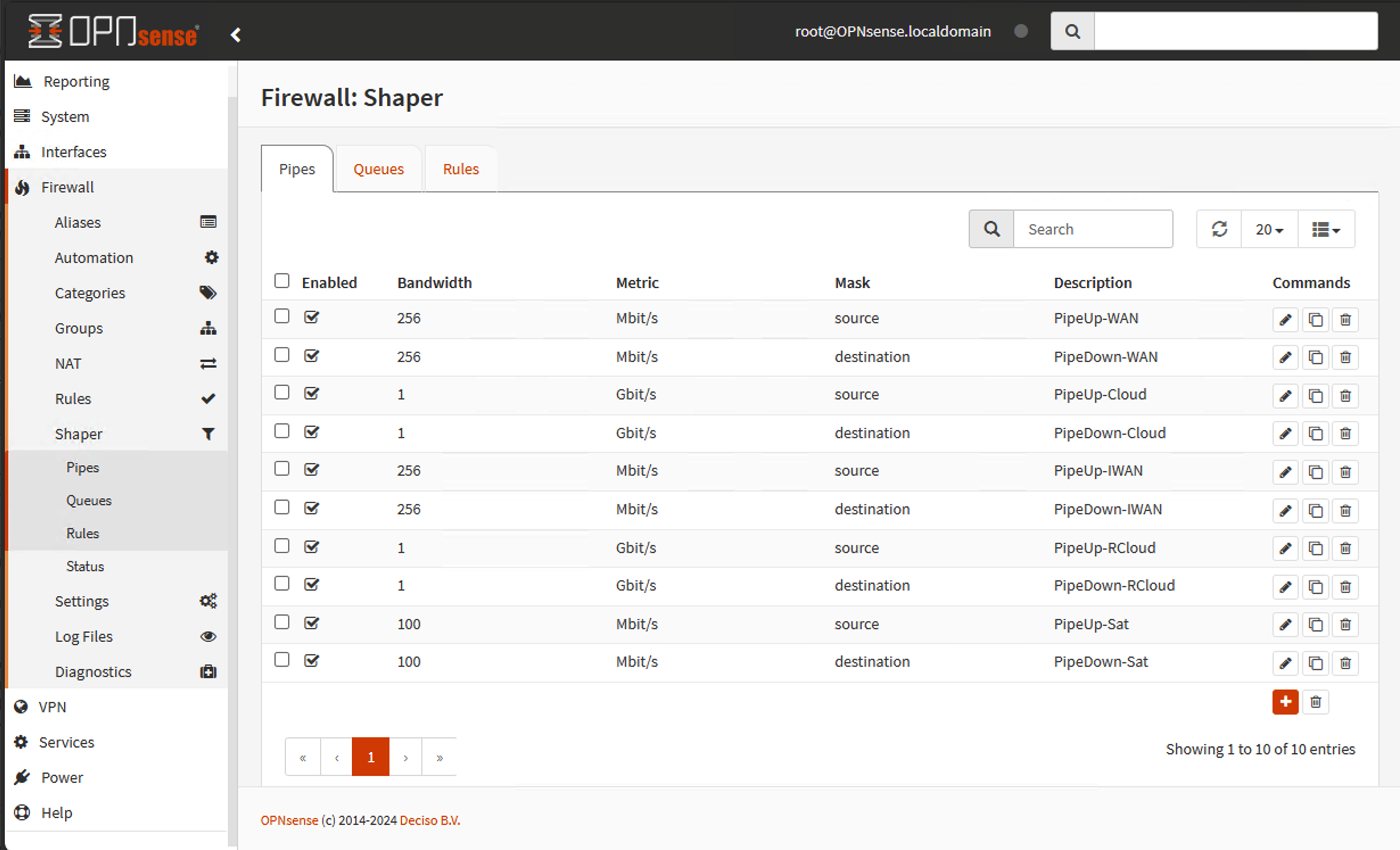

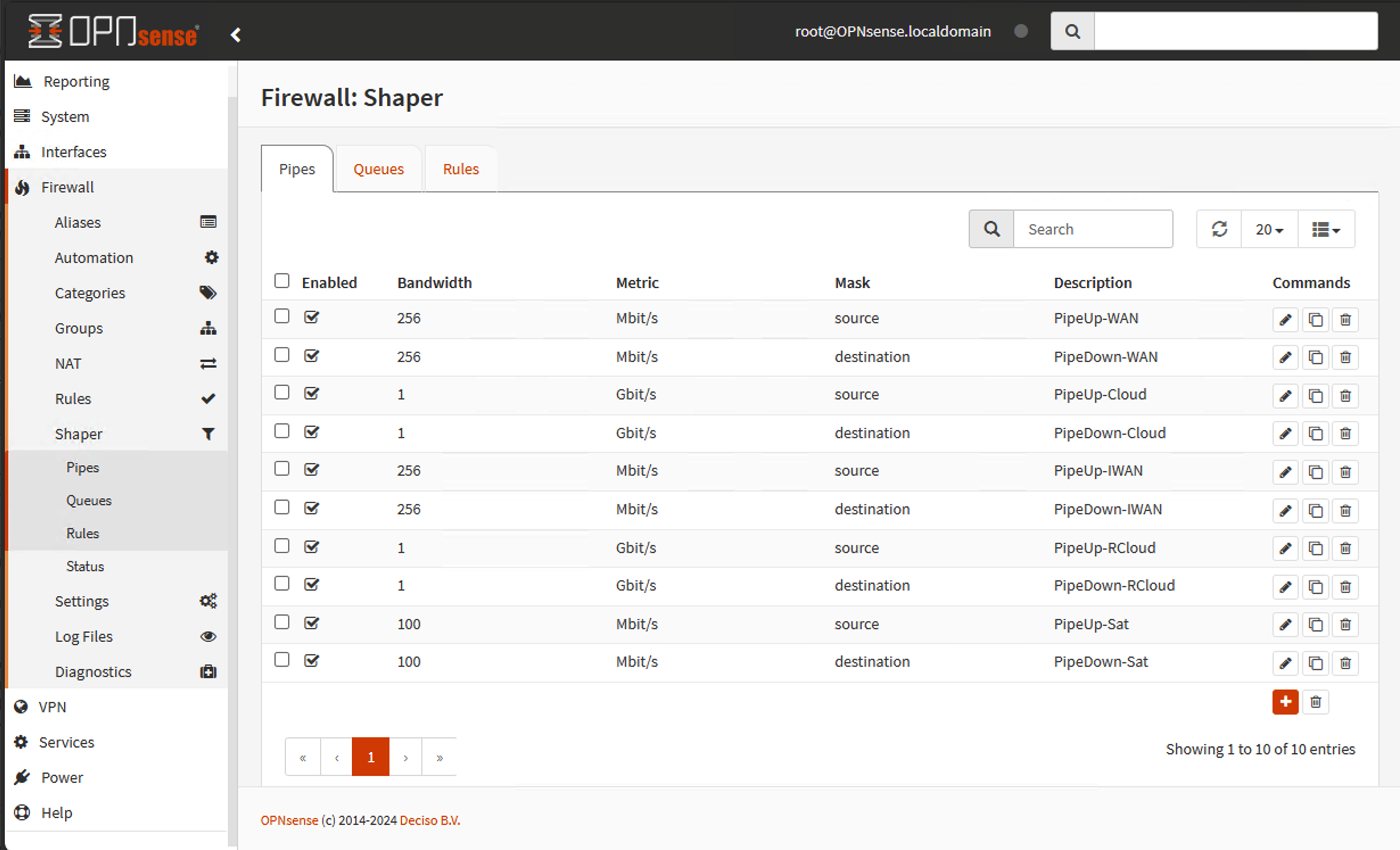

Next, I can define pipes for each type of network that I want to simulate. In my case, I have a variety:

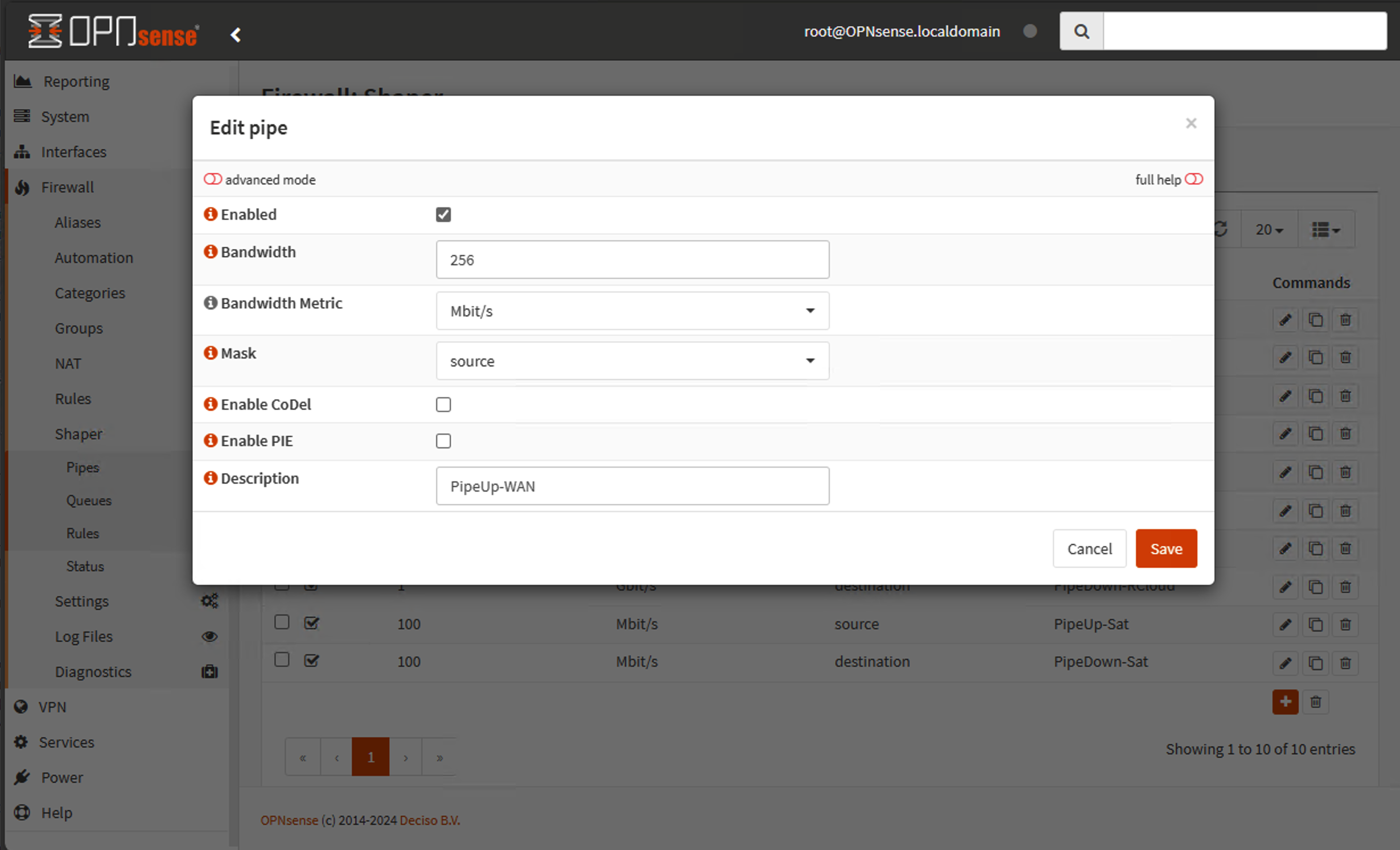

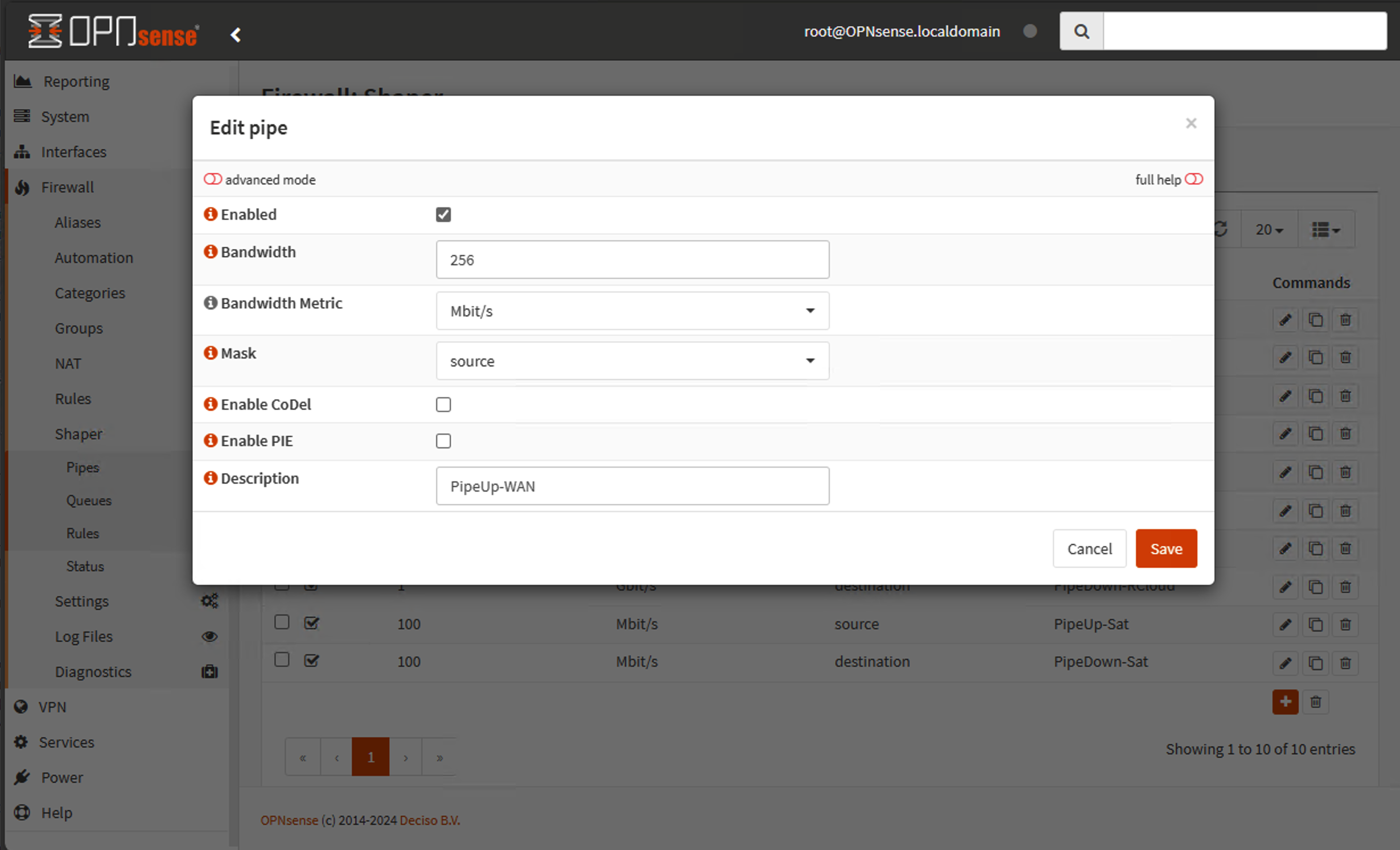

You have to configure each direction (up, down) separately. The basic configuration is fairly simple:

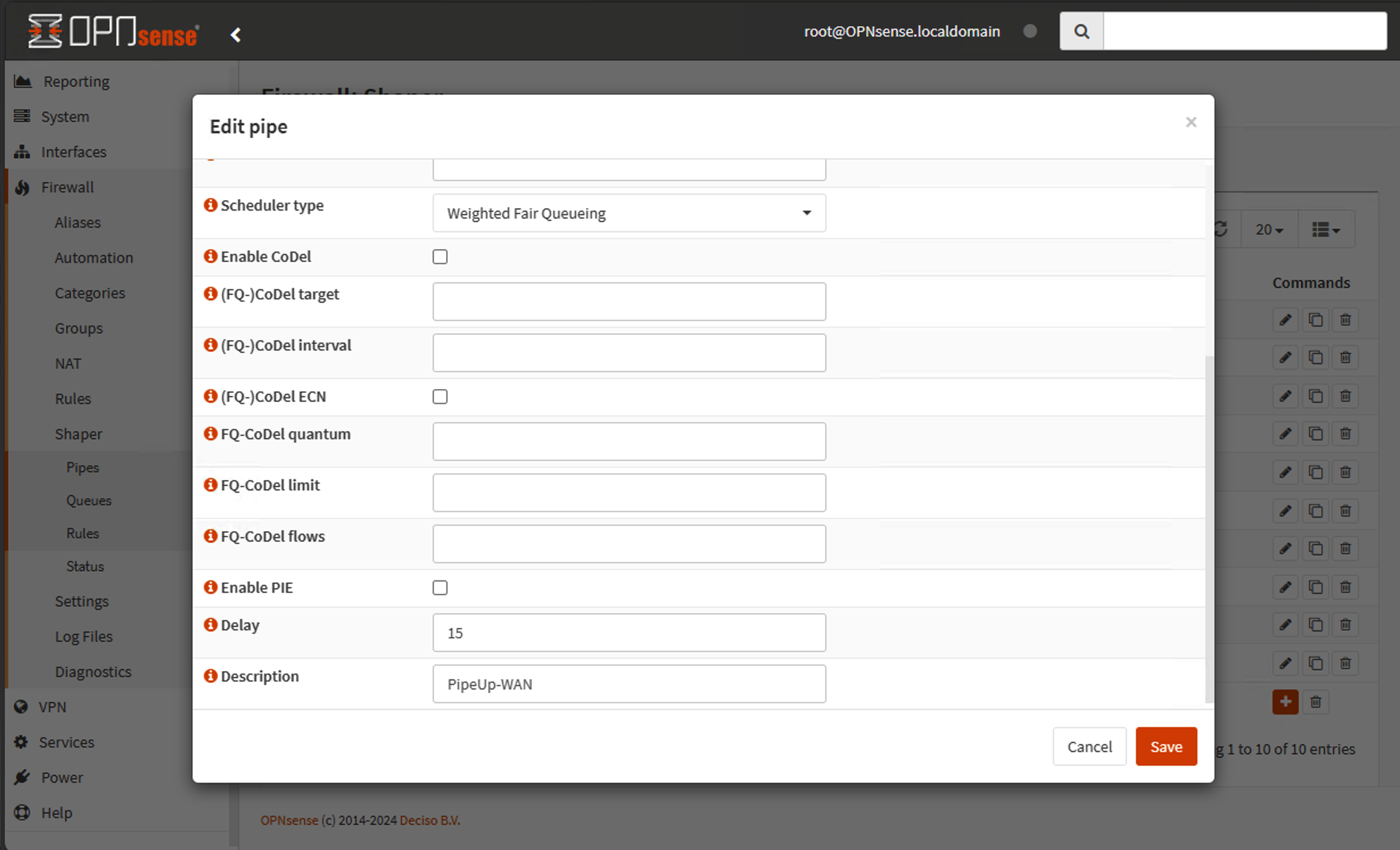

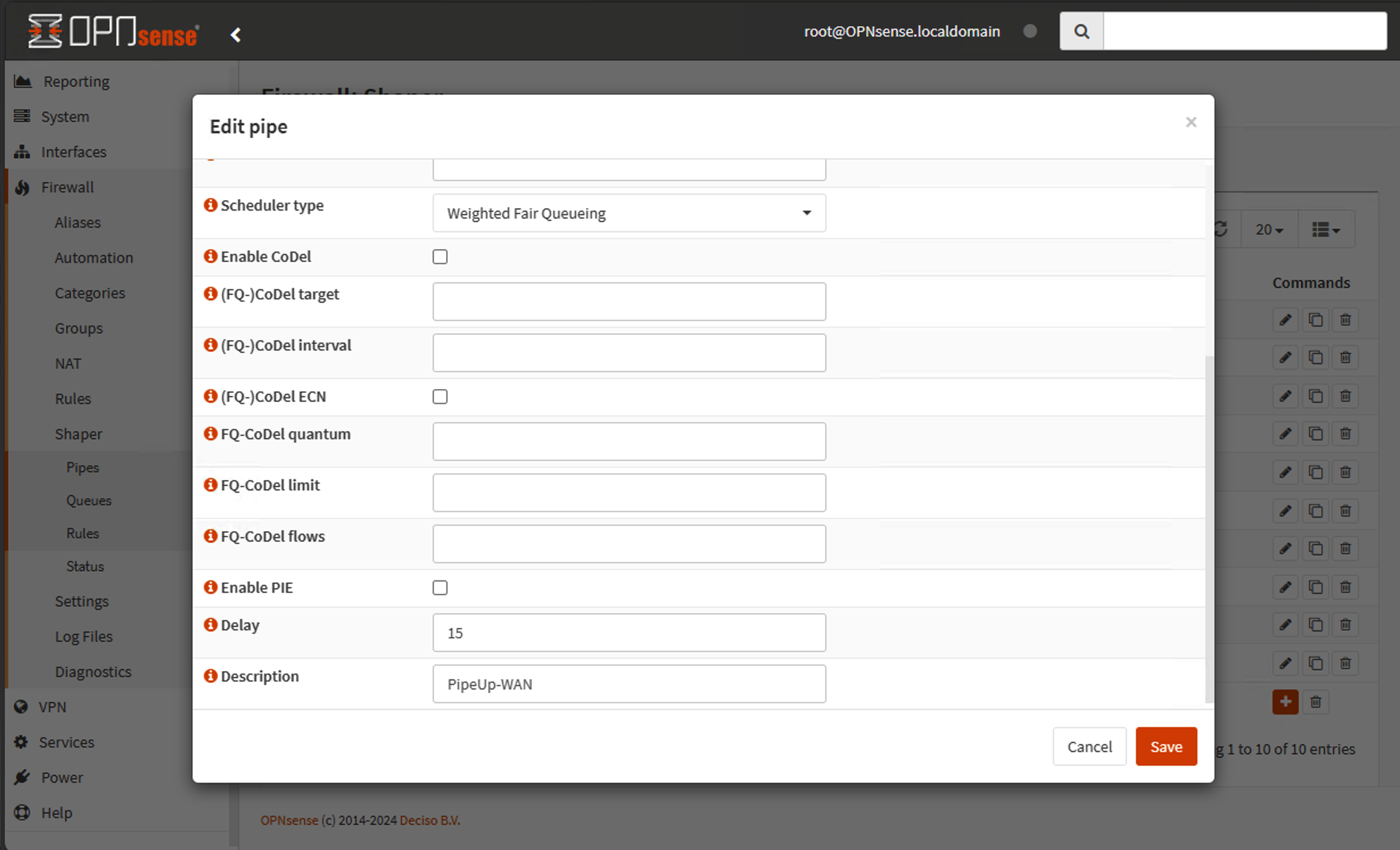

That specifies the bandwidth limit (256 Mbit/s in this case) and the direction (source), and gives it a name (PipeUp-WAN). But there is one other important detail that you can only see if you select "advanced mode":

The key value there is "Delay." That specifies the number of milliseconds that the traffic should be delayed. That's what simulates a true WAN link (more on that later).

Next, you need to do the same thing for the other direction, changing "up" to "down" and "source" to "destination."
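To make the "one pipe per direction" idea concrete, here is a minimal Python sketch that models the pair and prints roughly equivalent dummynet commands. It is only an illustration: OPNsense generates its own shaper configuration, the pipe numbers are hypothetical, the name `PipeDown-WAN` is assumed by analogy with `PipeUp-WAN`, and the 15 ms delay is an assumed value (half of a 30 ms round trip).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pipe:
    number: int          # hypothetical dummynet pipe number
    name: str            # description shown in the UI
    bandwidth_mbit: int  # bandwidth limit in Mbit/s
    delay_ms: int        # delay added to traffic passing through this pipe
    mask: str            # "source" or "destination", as in the OPNsense form

    def ipfw_command(self) -> str:
        # Roughly what the same pipe looks like as a dummynet command.
        # Illustration only; not necessarily what OPNsense writes out.
        return (f"ipfw pipe {self.number} config "
                f"bw {self.bandwidth_mbit}Mbit/s delay {self.delay_ms}ms")


def make_pair(label: str, bandwidth_mbit: int, one_way_delay_ms: int,
              first_number: int = 1) -> tuple[Pipe, Pipe]:
    """Build the up/down pipes for one simulated link."""
    up = Pipe(first_number, f"PipeUp-{label}",
              bandwidth_mbit, one_way_delay_ms, "source")
    down = Pipe(first_number + 1, f"PipeDown-{label}",
                bandwidth_mbit, one_way_delay_ms, "destination")
    return up, down


up, down = make_pair("WAN", bandwidth_mbit=256, one_way_delay_ms=15)
for pipe in (up, down):
    print(f"{pipe.name} (mask: {pipe.mask}): {pipe.ipfw_command()}")
```

Because each pipe only affects one direction, the delay seen in a ping is the sum of the two pipe delays, assuming both directions are matched by a rule.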

With the pipes created, you can now assign them to rules that specify how those are used on a network interface. Since I am just using one network and changing the pipe definitions for whatever I'm testing at the moment, I only need two rules for my second network segment; I can change these as needed.

Each of these rules specifies the source and destination, as well as the target pipe.

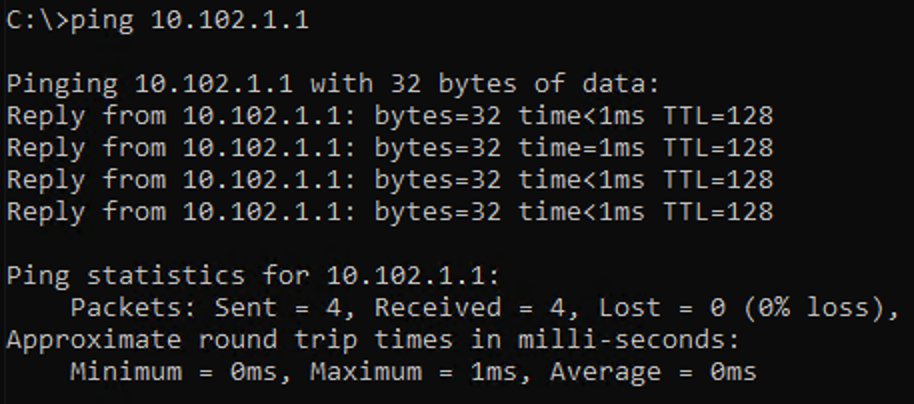

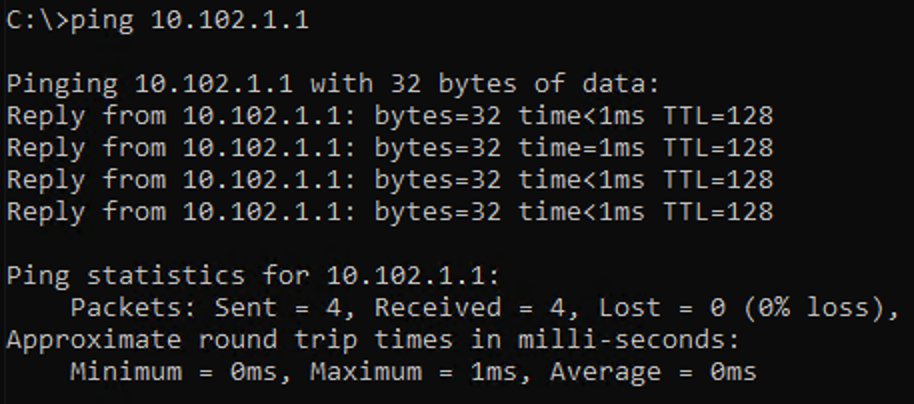

With those configurations in place (and applied so that they take effect), you can then see results. If I ping my server from network #1, with no rules or pipes in place, I see very fast responses, with 1ms (or less) response times:

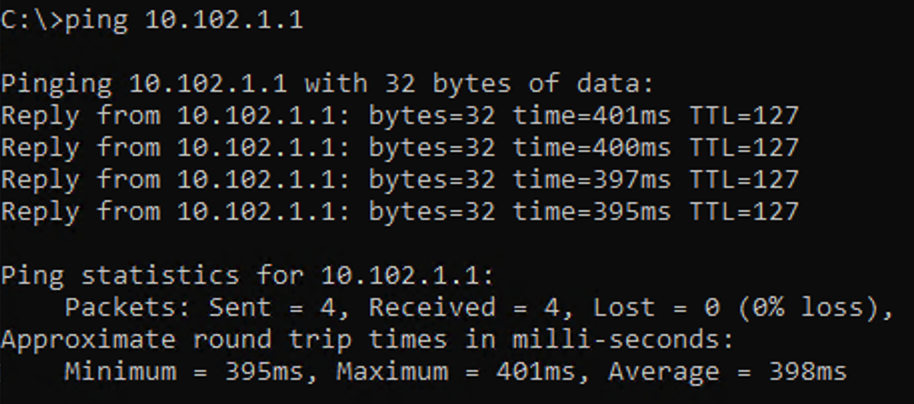

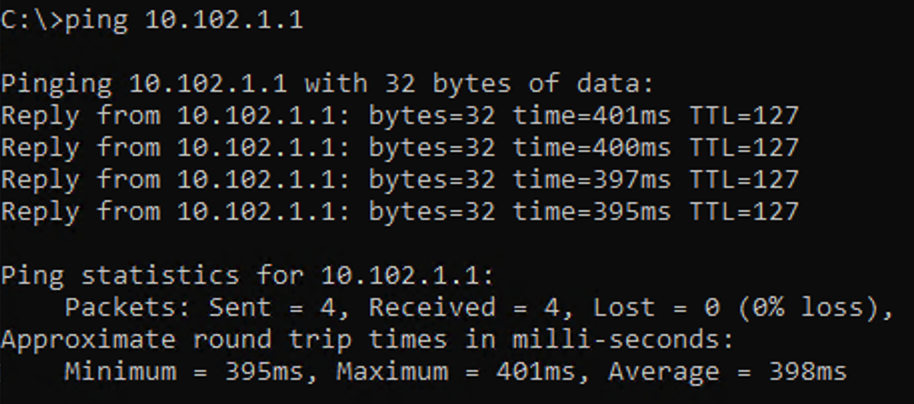

But if I do the same thing from the second network, in this case with a "satellite" pipe defined, the results are quite different:

Notice that I didn't do anything around packet loss. While the underlying ipfw component supports this, the OPNsense UI doesn't expose an option for configuring this. So for now, I'm going to ignore that piece -- my network is going to be perfectly reliable. I'll try to hobble it later.
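If you'd rather script the before-and-after comparison than read ping output, something like the following Python sketch could work. It times TCP connects, each of which takes roughly one round trip, so it approximates the ping numbers rather than reproducing them. The function names are my own, and it assumes the target server has a TCP port open that you are allowed to connect to.

```python
import socket
import statistics
import time


def tcp_connect_rtt_ms(host: str, port: int, samples: int = 5,
                       timeout: float = 2.0) -> list[float]:
    """Time several TCP connects and return the durations in milliseconds."""
    results = []
    for _ in range(samples):
        start = time.perf_counter()
        # connect returns once the handshake reply arrives: about one round trip
        with socket.create_connection((host, port), timeout=timeout):
            elapsed = time.perf_counter() - start
        results.append(elapsed * 1000.0)
    return results


def summarize(samples_ms: list[float]) -> dict[str, float]:
    """Reduce the samples to min / median / max, similar to ping's summary."""
    return {
        "min": min(samples_ms),
        "median": statistics.median(samples_ms),
        "max": max(samples_ms),
    }
```

For example, `summarize(tcp_connect_rtt_ms("10.102.1.10", 445))` run from each network should show the difference, where the address and port are hypothetical placeholders for a server on network #1.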

So everything is now configured, but it's worth spending a little more time on the different types of networks that I've defined. Here's my list:

| Type | Bandwidth | Round-trip time |
| --- | --- | --- |
| LAN | -- | -- |
| WAN | 256Mbit/s | 30ms |
| Local cloud | 1Gbit/s | 60ms |
| WAN inter-continent | 256Mbit/s | 100ms |
| Remote cloud | 1Gbit/s | 190ms |
| Satellite | 100Mbit/s | 400ms |

They are somewhat arbitrary, especially when it comes to bandwidth -- you can often buy any speed that you are willing to pay for. But there is some logic behind the round-trip times. Let's look at the "WAN" example first. It has a 30ms RTT value, which equates to 15ms one way and 15ms back. Let's assume that's a fibre connection operating at the speed of light. How far will light travel in 15ms? A rough guideline that I have seen in multiple locations (e.g. this one) is that light travels 124 miles per millisecond through fibre. So the two locations would be about 1860 miles apart (15ms at 124 miles per millisecond). That's roughly the distance from Minneapolis to Miami -- not an unreasonable distance.
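The same arithmetic can be applied to the other fibre-based entries. This is a rough Python sketch using the 124 miles per millisecond rule of thumb quoted above. It treats the whole round trip as time spent in fibre, so the results are upper bounds; real paths also spend time in routers and other equipment. The satellite entry is left out because it isn't a fibre path.

```python
FIBRE_MILES_PER_MS = 124  # rule of thumb quoted in the text


def one_way_distance_miles(rtt_ms: float,
                           miles_per_ms: float = FIBRE_MILES_PER_MS) -> float:
    """Distance light covers in fibre during half of the round-trip time."""
    return (rtt_ms / 2) * miles_per_ms


# Round-trip times from the table (fibre-based entries only)
RTT_MS = {
    "WAN": 30,
    "Local cloud": 60,
    "WAN inter-continent": 100,
    "Remote cloud": 190,
}

for name, rtt in RTT_MS.items():
    miles = one_way_distance_miles(rtt)
    print(f"{name}: {rtt} ms RTT -> at most ~{miles:,.0f} miles of fibre")

# WAN: 30 ms RTT -> at most ~1,860 miles of fibre
# Local cloud: 60 ms RTT -> at most ~3,720 miles of fibre
# WAN inter-continent: 100 ms RTT -> at most ~6,200 miles of fibre
# Remote cloud: 190 ms RTT -> at most ~11,780 miles of fibre
```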

What about cloud services? Fortunately, vendors like Microsoft (Azure) and Amazon (AWS) publish data about this:

And there are tools that will measure the time from your location to various cloud regions:

So the values I am using are based on both the vendor data and the measurement tools. (Thinking about hosting something in the cloud in the US and accessing it from Europe? Latency is a major concern.)

Lastly, there are satellite links. Traditionally, these have had long round-trip times due to their high orbits around the earth, which causes all sorts of challenges. New low-earth-orbit satellites like those from Starlink are much more reasonable, but since their times are similar to the "local cloud" values in my table above, I didn't need yet another entry in the table for them.

So what do we do with all of this? Well, that's the subject for another post...