Including the 10 Golden Rules of Software Distribution!

Johan wrote a great blog article two days ago (https://2pintsoftware.com/picking-the-right-compression-algorithm-for-branchcache/) about the best format for distributing drivers as part of the OSD process. This blog is a continuation of that subject, but focuses more on the delta aspect between different versions of the same package. So let's say you have Adobe 10 and want to go to Adobe 11: what's the best way of dealing with this? I did cover parts of the way that BranchCache deals with deltas in my BranchCache series of talks for Ignite 2016 and 2017, but I think it's time for a recap!

So instead of delving too much into the technical details (Yeah, fat chance!) this blog is more of a "this is what you should do" as opposed to "this is all the things you could do"!

The Options

Basically we have two good options, .wim with fast compression and .zip with fast compression. Everything else pretty much sucks at delta between versions, so let's stick with those.

And although the other, more aggressive formats can save a few gigs on the server storage side, you will lose them again on the network transfer side (and the client caching side)! And as the Oracle corporation says: "Disk is cheap!".

Hmm... lost already, I hear you say. How can wasting server disk space save bandwidth and cache storage?

Le Devil as they say is in Le Details...

So, let's try to explain this... We have a package, let's call it version 1. It consists of data, right!? You with me so far? Good. We assume you are on Windows 10 here, so the BranchCache version is 2. (We won't bother with Version 1.) BranchCache will then "chunk" this data into blocks that it finds likely to be the same/different in other versions or other files. Sounds like magic? Yeah, it's pretty much just that. Written by some smart people this shit was, I am telling yah!

The BranchCache "chunking" logic is the same one that Windows Server uses for chunking data in its DeDuplication chunk store. DeDupe on a server stores its chunks in a chunk store under System Volume Information and then just points the files at those chunks using reparse points. The Windows client, however, stores its blocks in the BranchCache cache (as the DeDupe chunk store is not really in play for clients).

So back to our version 1 of the package. It contains data blocks labelled A, B, C, D, E, F, G, H, K, L, M, N, O, P, Q, R, S, T, U, V. BranchCache then parses this and creates a hash for each block, checking to see if it already has one for it. In this case, the resulting hashes look like this:

Base file data blocks: A, B, C, D, E, F, G, H, K, L, M, N, O, P, Q, R, S, T, U, V

BC Hashes: A, B, C, D, E, F, G, H, K, L, M, M, O, P, Q, R, S, T, U, V

What happened to the letter N? It's gone from the BC hashes above and replaced with M!!! Yeah, 'cause BranchCache saw that N was actually the same data as M, so it's just pointing back to M. So the number of unique blocks is now:

Base file data blocks: A, B, C, D, E, F, G, H, K, L, M, N, O, P, Q, R, S, T, U, V = 20 blocks

BC Hashes: A, B, C, D, E, F, G, H, K, L, M, M, O, P, Q, R, S, T, U, V = 19 unique blocks

So if we then distribute this data to a client, it will download 19 unique blocks. As the M and N blocks are the same, there is no need to download that data twice. And as the data is the same, it stores only 19 blocks in the BranchCache client database. Awesome saving already.
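The counting above can be sketched in a few lines of Python. This is only an illustration of the idea, not how BranchCache is actually implemented: the letters stand in for real data blocks, SHA-256 is an assumed stand-in for the block hash, and `payload` and `block_hashes` are hypothetical helpers. To model N holding the same data as M, block N is simply given M's content.

```python
import hashlib

# Version 1 of the package, one label per data block (labels from the text).
V1_LABELS = "A B C D E F G H K L M N O P Q R S T U V".split()


def payload(label: str) -> bytes:
    """Toy block content. N is assumed to hold exactly the same bytes as M."""
    if label == "N":
        label = "M"
    return f"data-for-{label}".encode()


def block_hashes(labels):
    """Hash every block; identical content yields identical hashes."""
    return [hashlib.sha256(payload(label)).hexdigest() for label in labels]


hashes = block_hashes(V1_LABELS)
unique = set(hashes)

print(len(hashes), "blocks in the file")
print(len(unique), "unique blocks to download and cache")
```

Because M and N hash to the same value, the set of unique hashes is one smaller than the list of blocks, which is the whole trick.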

Now here comes version 2 of the package, and it's a wee bit different:

Base file data blocks: X, B, C, D, E, F, G, H, K, L, M, N, O, Z, Q, R, S, T, U, V

So what happens now to the BC blocks? Well, it will find the delta. As we have most of the blocks already calculated, we only store the new/changed items:

BC Hashes: X, Z = 2 new unique blocks only, the rest of the file is the same! Wohoooo!

So, if we were to distribute version 2 to a client that already has version 1 in its BranchCache cache, it would only download 2 friggin' blocks, X and Z, and then reconstruct the whole file using the already downloaded data. Epic savings. How big the savings are really depends on how the files in the source package change. BranchCache is typically very good at finding deltas in the files themselves, so we need to HELP BranchCache with this, by not throwing spanners into the works.
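As a rough sketch of that delta logic, assume each block is identified by a hash of its content (SHA-256 here, chosen purely for illustration) and that the client keeps a set of the hashes it already holds. The helper names are hypothetical, and the two changed blocks are labelled X and Z so that neither label collides with one already used in version 1.

```python
import hashlib


def payload(label: str) -> bytes:
    """Toy block content. N is assumed to hold the same bytes as M."""
    return f"data-for-{'M' if label == 'N' else label}".encode()


def digest(label: str) -> str:
    return hashlib.sha256(payload(label)).hexdigest()


v1 = "A B C D E F G H K L M N O P Q R S T U V".split()
# Version 2: the first block and the block that used to be P have changed.
v2 = "X B C D E F G H K L M N O Z Q R S T U V".split()

# What the client already holds after downloading version 1.
client_cache = {digest(label) for label in v1}

# Only blocks whose hash is not in the cache have to cross the network.
to_download = []
for label in v2:
    h = digest(label)
    if h not in client_cache:
        to_download.append(label)
        client_cache.add(h)

print("download:", to_download)
print("blocks in cache afterwards:", len(client_cache))
```

In this toy model the client fetches two blocks and ends up holding 21 unique blocks in total: the 19 from version 1 plus the 2 new ones.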

When Zip Turns To...

This is where the fun part starts. Now imagine that some schmuck decided to compress the crap out of his source package, using a very complicated and great algorithm. What happens then to the data structure?

Well, it gets scrambled. The original file still looks like version 1, but as soon as he changes things in version 2, the new hyper-compressed file looks like this:

Base file data blocks: 1, 2, 3, 4, 5, 7, 8, 9, Å, Ä, Ö, #, @, &, !, ¤, £, €, =, $ = 20 unique blocks again! WTF!?!

Well, so what will BranchCache do now? There are NO similarities between version 1 and version 2 of the package, so we will just have to send the whole thing again. This means that DeDupe on the server has to store 20 + 20 unique blocks, the client has to cache 20 + 20 unique blocks, and over the network we had to send 20 + 20 = 40 unique blocks. (If you think this is crazily inefficient, this is how all legacy P2P technologies for ConfigMgr operate today for all packages.) Had we used a better way of packaging this up, BranchCache would have needed a total of 21 blocks (19 for version 1 plus 2 new ones for version 2) instead of 40. And yes, you would get these savings even if version 1 and 2 are different packages.
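The effect is easy to reproduce in miniature. The sketch below is a simplified experiment, not a model of BranchCache itself: it assumes fixed-size 4 KB chunks (the real chunking is smarter), uses SHA-256 as the chunk hash, and uses zlib at level 9 as a stand-in for "some aggressive compressor". It builds a compressible payload, changes only the first 64 bytes to make a "version 2", and measures how many of the new file's chunks already exist in the old one, first stored as-is and then compressed. The payload and the helper names are made up for the example.

```python
import hashlib
import random
import zlib

CHUNK = 4096  # fixed-size chunks: a simplification, not BranchCache's real chunking


def chunk_hashes(data: bytes):
    """Split data into CHUNK-sized pieces and hash each piece."""
    return [hashlib.sha256(data[i:i + CHUNK]).digest()
            for i in range(0, len(data), CHUNK)]


def reuse_ratio(old: bytes, new: bytes) -> float:
    """Fraction of the new file's chunks that already exist in the old file."""
    cached = set(chunk_hashes(old))
    new_hashes = chunk_hashes(new)
    return sum(h in cached for h in new_hashes) / len(new_hashes)


# Deterministic, compressible stand-in for a package (roughly 200 KB of words).
rng = random.Random(0)
words = ["driver", "setup", "install", "branch", "cache", "update", "package"]
v1 = " ".join(rng.choice(words) for _ in range(30000)).encode()
# Version 2: same size, only the first 64 bytes are different.
v2 = b"#" * 64 + v1[64:]

raw_ratio = reuse_ratio(v1, v2)
packed_ratio = reuse_ratio(zlib.compress(v1, 9), zlib.compress(v2, 9))

print(f"stored as-is:          {raw_ratio:.0%} of chunks reused")
print(f"compressed at level 9: {packed_ratio:.0%} of chunks reused")
```

Stored as-is, every chunk except the first one is reused. Once the data has been squeezed through the compressor, a small change near the start ripples through the rest of the stream, so far fewer chunks (if any) line up with the old version. Exact numbers will vary with the data and the compressor.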

Tools For The Job

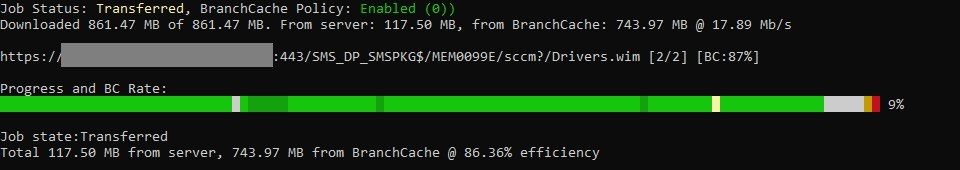

Let's see if some visuals from our toolset (BCMon) can show this. The data is from a real-world BranchCache user who was intrigued by how effective BranchCache is:

Empty cache, production driver package (this is the same as our version 1 package above):

As you can see, there is a 6% saving within the file itself (remember M replacing N?), but most of the content comes from the server. Basically, the gray bar indicates that content is coming from the source (as it should, 'cause this is the first download into an empty cache), while the other colors, from red to green, indicate 'some' level of BranchCache ratio, where bright green is over 95%.

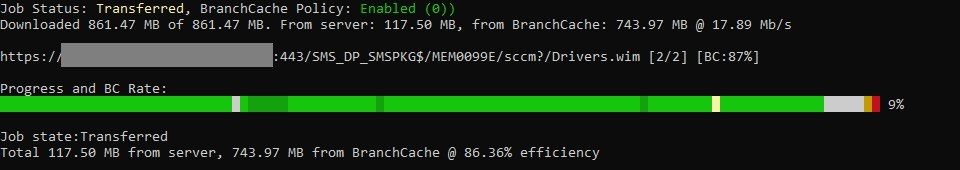

Next, pre-prod driver package (slightly different/updated):

As you can see, this package finds most of its content already downloaded from the previous version, so it doesn't have to go over the wire.

So as some packages change very frequently, it's key that we learn how and what to distribute, because the savings can be enormous.

The Golden Rulez

So these are Andreas' Golden Software Distribution Rules (and God help anyone breaking them):

- If the source has multiple files (like above 10) then .zip the content using FAST or NOCOMPRESSION (no compression just binary-combines all the files into one file). Keep in mind that files below 64K will not be BranchCached by default, but 10x 64K files in a .zip will!

- PowerShell (Compress-Archive) uses OPTIMAL compression unless you specify otherwise, which is not great.

- Don't use default 7-Zip! Nuff said. (Its default format uses a different, aggressive algorithm.) You can use 7-Zip to create a .zip with low (or no) compression: https://sevenzip.osdn.jp/chm/cmdline/switches/method.htm

  - Make sure you set the -m method switch to Deflate or Deflate64 (see comment below) and also set x=1 on the command line.

  - You also need to set the fb={NumFastBytes} value.

  - You also need to set the correct pass={NumPasses} value.

  - Starting to get the point now?

- Never use .wim OPTIMAL compression.

- Never use any other "cool" compression tools, they are likely to be very aggressive.

- You can use .vhd instead of .zip if you want to binary-combine the files and then not have to expand them to disk.

- When deploying bastid apps, use .wim with FAST or no compression, then mount it and run the install from the mount point.

- When using .wim, use FAST as a base.

- When updating .wim files, adding files only appends to the tail (the end of the file) and keeps most of it intact (but the .wim grows in size). It then updates the XML header at the beginning of the file, so the delta is still small.

- Use the same media for OSD bare metal builds as well as IPU upgrades. Patch media and rebuild often. BranchCache has you covered.
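To make the first rule concrete, here is a minimal sketch of packing a folder into a single .zip with either no compression or the fastest Deflate setting. It uses Python's standard `zipfile` module as a stand-in for whatever packaging tool you actually use; `pack_for_branchcache` is a hypothetical helper name, and adding files in sorted order is an assumption on my part to keep the layout as stable as possible between versions.

```python
import zipfile
from pathlib import Path


def pack_for_branchcache(source_dir, zip_path, fast=False):
    """Bundle every file under source_dir into one .zip.

    fast=False -> stored (no compression, files are simply combined)
    fast=True  -> Deflate at level 1, the fastest and lightest setting
    """
    source = Path(source_dir)
    # Collect the file list first, in a stable order, before creating the archive.
    files = sorted(p for p in source.rglob("*") if p.is_file())
    method = zipfile.ZIP_DEFLATED if fast else zipfile.ZIP_STORED
    level = 1 if fast else None  # compresslevel has no effect for ZIP_STORED
    with zipfile.ZipFile(zip_path, "w", compression=method,
                         compresslevel=level) as archive:
        for path in files:
            # Store paths relative to the source folder, with forward slashes.
            archive.write(path, path.relative_to(source).as_posix())
    return zip_path
```

A call such as `pack_for_branchcache("DriverPackage", "DriverPackage.zip")` (both paths are placeholders) would produce the uncompressed variant. Write the archive outside the source folder, otherwise an older copy of the .zip gets packed into the new one.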

Another key aspect is to monitor your P2P performance; it's not a given that it "just works" and stays working. If only there was such a tool...

Doris The Bot..
